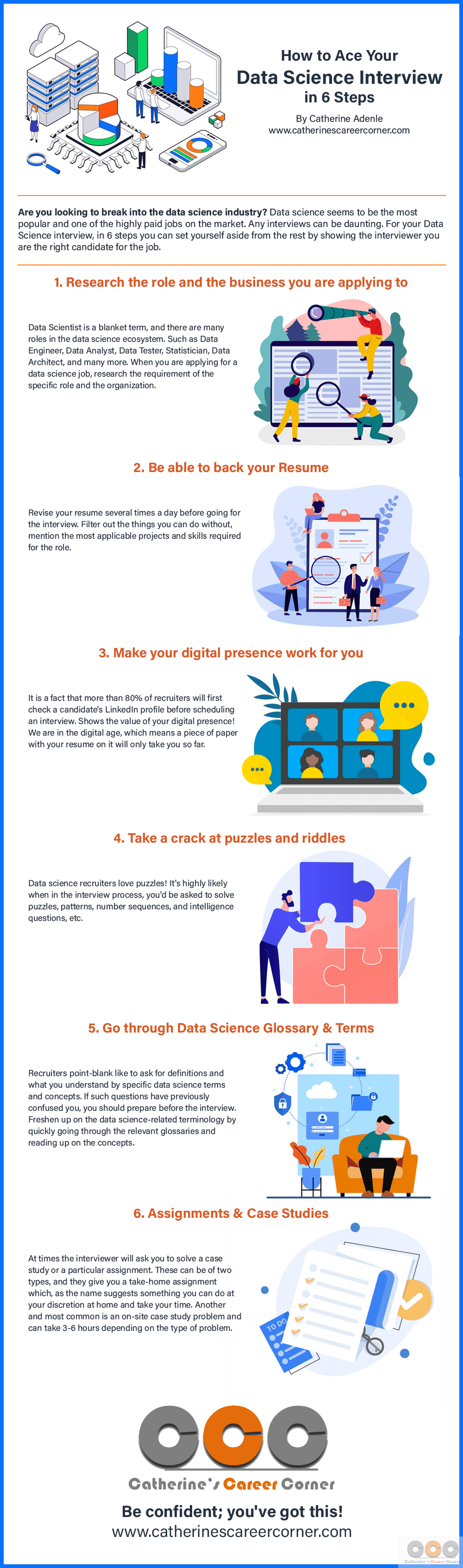

Amazon now typically asks interviewees to code in an online document. However, this can vary; it could be on a physical whiteboard or a virtual one (see Exploring Data Sets for Interview Practice). Check with your recruiter what it will be and practice it a lot. Now that you know what questions to expect, let's focus on how to prepare.

Below is our four-step prep plan for Amazon data scientist candidates. Before spending tens of hours preparing for an interview at Amazon, you should take some time to make sure it's actually the right company for you.

Practice the method using example questions such as those in section 2.1, or those related to coding-heavy Amazon positions (e.g. Amazon software development engineer interview guide). Practice SQL and programming questions with medium and hard level examples on LeetCode, HackerRank, or StrataScratch. Take a look at Amazon's technical topics page, which, although it's designed around software development, should give you an idea of what they're looking out for.

Keep in mind that in the onsite rounds you'll likely have to code on a whiteboard without being able to execute it, so practice working through problems on paper. Free courses are also available on introductory and intermediate machine learning, as well as data cleaning, data visualization, SQL, and other topics.

Real-life Projects For Data Science Interview Prep

Make sure you have at least one story or example for each of the principles, from a wide range of positions and projects. Finally, a great way to practice all of these different types of questions is to interview yourself out loud. This may sound strange, but it will significantly improve the way you communicate your answers during an interview.

One of the main challenges of data scientist interviews at Amazon is communicating your different answers in a way that's easy to understand. As a result, we strongly recommend practicing with a peer interviewing you.

Be warned, as you may come up against the following problems: it's hard to know if the feedback you get is accurate; peers are unlikely to have insider knowledge of interviews at your target company; and on peer platforms, people often waste your time by not showing up. For these reasons, many candidates skip peer mock interviews and go straight to mock interviews with an expert.

Advanced Behavioral Strategies For Data Science Interviews

That's an ROI of 100x!

Data Science is quite a large and diverse field. As a result, it is really difficult to be a jack of all trades. Traditionally, Data Science would focus on mathematics, computer science and domain expertise. While I will briefly cover some computer science fundamentals, the bulk of this blog will mostly cover the mathematical basics one might need to brush up on (or even take an entire course on).

While I understand most of you reading this are more math heavy by nature, realize the bulk of data science (dare I say 80%+) is collecting, cleaning and processing data into a useful form. Python and R are the most popular languages in the Data Science space. I have also come across C/C++, Java and Scala.

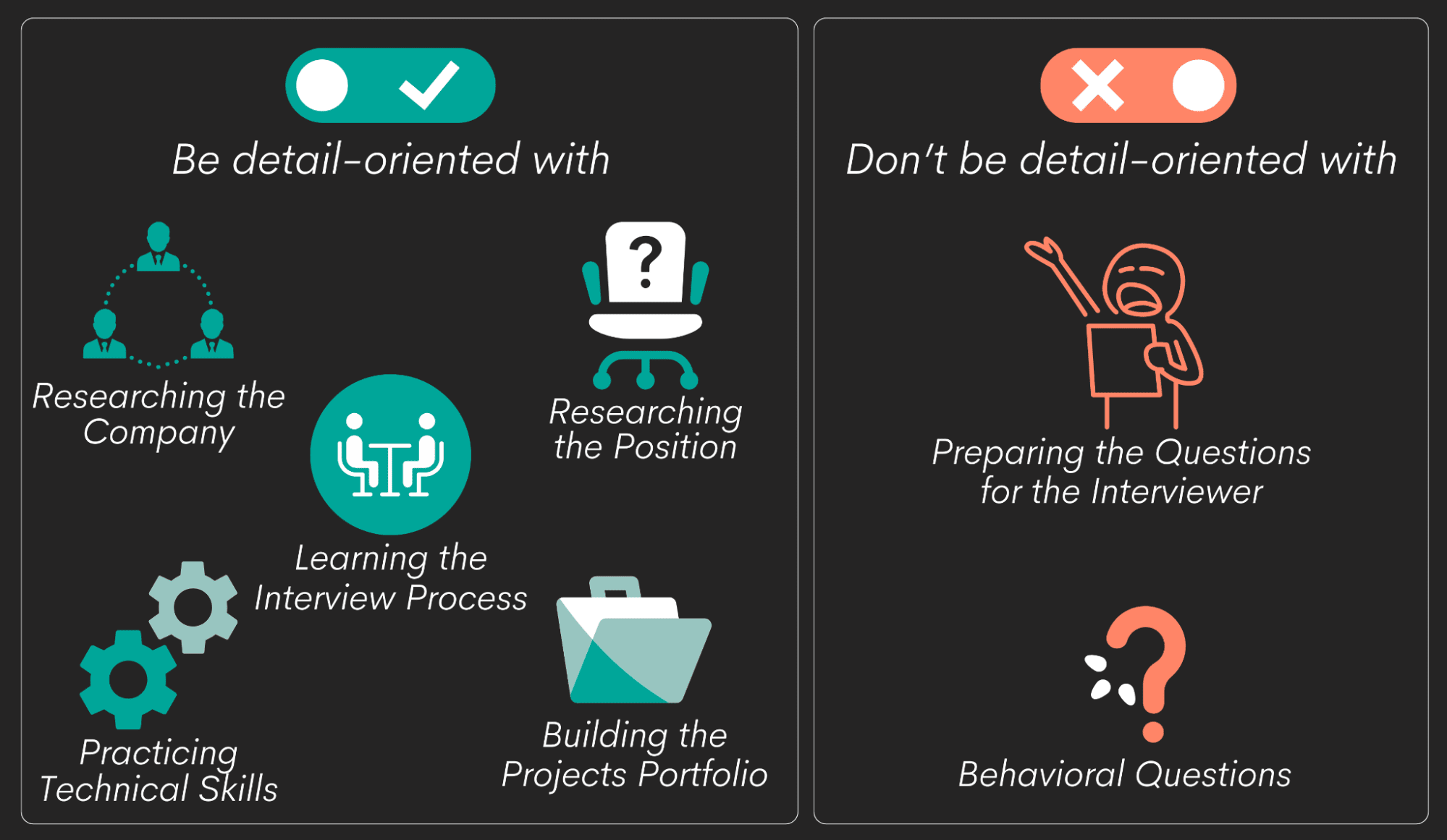

Preparing For Data Science Interviews

Common Python libraries of choice are matplotlib, numpy, pandas and scikit-learn. It is common to see most data scientists falling into one of two camps: Mathematicians and Database Architects. If you are in the second camp, the blog won't help you much (YOU ARE ALREADY AWESOME!). If you are in the first group (like me), chances are you feel that writing a double nested SQL query is an utter nightmare.
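To make the "double nested SQL query" less intimidating, here is a minimal sketch using Python's built-in `sqlite3` module. The `orders` table, its columns and the sample rows are hypothetical and exist only for illustration.

```python
import sqlite3

# Hypothetical in-memory table; names and rows are illustrative only.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (customer TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO orders VALUES (?, ?)",
    [("ann", 10.0), ("ann", 30.0), ("bob", 5.0), ("cara", 50.0), ("cara", 70.0)],
)

# The WHERE clause holds a subquery that itself wraps another subquery:
#   innermost -> total spend per customer
#   middle    -> average of those totals
#   outer     -> customers whose own total exceeds that average
query = """
SELECT customer, total
FROM (SELECT customer, SUM(amount) AS total
      FROM orders GROUP BY customer) AS per_customer
WHERE total > (
    SELECT AVG(total)
    FROM (SELECT SUM(amount) AS total
          FROM orders GROUP BY customer) AS totals
)
ORDER BY customer
"""
above_average = conn.execute(query).fetchall()
conn.close()
print(above_average)
```

Reading such a query from the innermost parentheses outward is usually the easiest way to follow it.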

Data collection could mean gathering sensor data, parsing websites or carrying out surveys. After collecting the data, it needs to be transformed into a usable form (e.g. a key-value store in JSON Lines files). Once the data is collected and put in a usable format, it is essential to perform some data quality checks.
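As a rough sketch of that workflow, the snippet below writes a few records as JSON Lines and runs three basic quality checks. The survey records and field names are hypothetical, and the checks shown (missing values, duplicates, impossible values) are only a starting point.

```python
import io
import json

# Hypothetical survey records; field names are illustrative only.
records = [
    {"user_id": 1, "age": 34, "country": "US"},
    {"user_id": 2, "age": None, "country": "DE"},
    {"user_id": 2, "age": None, "country": "DE"},  # exact duplicate
    {"user_id": 3, "age": -5, "country": "FR"},    # impossible value
]

# JSON Lines: one JSON object per line. A StringIO stands in for a file.
buffer = io.StringIO()
for record in records:
    buffer.write(json.dumps(record) + "\n")

# Read the lines back and run a few basic quality checks.
rows = [json.loads(line) for line in buffer.getvalue().splitlines()]
missing_age = sum(1 for r in rows if r["age"] is None)
unique_rows = {json.dumps(r, sort_keys=True) for r in rows}
duplicates = len(rows) - len(unique_rows)
invalid_age = sum(1 for r in rows if r["age"] is not None and r["age"] < 0)

print(missing_age, duplicates, invalid_age)
```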

Creating Mock Scenarios For Data Science Interview Success

However, in cases of fraud, it is very common to have heavy class imbalance (e.g. only 2% of the dataset is actual fraud). Such information is important for making the appropriate choices in feature engineering, modelling and model evaluation. For more information, check my blog on Fraud Detection Under Extreme Class Imbalance.
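A small sketch shows why imbalance matters for model evaluation. The labels below are made up to match the 2% example, and the "model" is a deliberately useless one that always predicts the majority class.

```python
# Hypothetical labels: 2% fraud (1) and 98% legitimate (0).
y_true = [1] * 20 + [0] * 980

# A useless "model" that always predicts the majority class.
y_pred = [0] * len(y_true)

# Accuracy: share of predictions that match the label.
correct = sum(t == p for t, p in zip(y_true, y_pred))
accuracy = correct / len(y_true)

# Recall: share of actual fraud cases that the model caught.
true_positives = sum(t == 1 and p == 1 for t, p in zip(y_true, y_pred))
actual_positives = sum(y_true)
recall = true_positives / actual_positives

print(f"accuracy={accuracy:.2f}, recall={recall:.2f}")
```

The accuracy looks excellent even though not a single fraud case is caught, which is why metrics such as recall or precision are usually preferred over accuracy in this setting.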

In bivariate analysis, each feature is compared to other features in the dataset. Scatter matrices allow us to find hidden patterns such as features that should be engineered together and features that may need to be eliminated to avoid multicollinearity. Multicollinearity is actually an issue for multiple models like linear regression and hence needs to be taken care of accordingly.
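A numeric companion to the scatter matrix is the pairwise correlation matrix. The sketch below uses synthetic features, and the 0.9 cut-off for flagging a pair is an arbitrary assumption rather than a standard value.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical features: x2 is almost a linear copy of x1, x3 is independent.
x1 = rng.normal(size=200)
x2 = 2.0 * x1 + rng.normal(scale=0.01, size=200)
x3 = rng.normal(size=200)
features = np.column_stack([x1, x2, x3])

# Pairwise Pearson correlations between columns.
corr = np.corrcoef(features, rowvar=False)

# Flag feature pairs whose absolute correlation exceeds the assumed threshold.
threshold = 0.9
collinear_pairs = [
    (i, j)
    for i in range(corr.shape[0])
    for j in range(i + 1, corr.shape[1])
    if abs(corr[i, j]) > threshold
]
print(collinear_pairs)
```

Only the first two columns are flagged, so one of them would be a candidate for removal before fitting a linear model.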

In this section, we will explore some common feature engineering tactics. At times, the feature by itself may not provide useful information. Imagine using internet usage data. You will have YouTube users going as high as gigabytes while Facebook Messenger users use a couple of megabytes.
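One common remedy for features that span several orders of magnitude is a log transform. The text above does not name a specific fix, so treat this as one possible approach; the usage numbers are hypothetical.

```python
import math

# Hypothetical monthly usage in bytes: two heavy video users, two light chat users.
usage_bytes = [5e9, 2e9, 3e6, 1e6]

# log10 compresses the range so gigabyte users no longer dwarf megabyte users.
log_usage = [math.log10(b) for b in usage_bytes]

# Compare the spread before and after the transform.
raw_ratio = max(usage_bytes) / min(usage_bytes)
log_ratio = max(log_usage) / min(log_usage)
print(raw_ratio, round(log_ratio, 2))
```

After the transform the largest value is less than twice the smallest, instead of thousands of times larger.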

Another issue is the use of categorical values. While categorical values are common in the data science world, realize computers can only comprehend numbers.
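A widely used way to turn categories into numbers is one-hot encoding. The text does not prescribe a particular encoding, so this is offered as one option; the `colors` column is hypothetical.

```python
# Hypothetical categorical column.
colors = ["red", "green", "blue", "green"]

# Fix a column order, then emit one 0/1 indicator per category.
categories = sorted(set(colors))
one_hot = [[1 if value == cat else 0 for cat in categories] for value in colors]

print(categories)
for row in one_hot:
    print(row)
```

Each row contains exactly one 1, so no artificial ordering between categories is implied.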

Exploring Data Sets For Interview Practice

At times, having too many sparse dimensions will hamper the performance of the model. An algorithm commonly used for dimensionality reduction is Principal Components Analysis or PCA.
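PCA can be sketched in a few lines with NumPy's singular value decomposition. The data here is synthetic and built so that five observed features really vary along two directions; `k = 2` is chosen to match that assumption.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical data: 100 samples, 5 features that really vary along 2 directions.
latent = rng.normal(size=(100, 2))
mixing = rng.normal(size=(2, 5))
X = latent @ mixing + rng.normal(scale=0.01, size=(100, 5))

# PCA: center the data, then take the SVD of the centered matrix.
X_centered = X - X.mean(axis=0)
U, S, Vt = np.linalg.svd(X_centered, full_matrices=False)

# Fraction of total variance captured by each principal component.
explained_ratio = S**2 / np.sum(S**2)

# Project onto the first k principal components.
k = 2
X_reduced = X_centered @ Vt[:k].T
print(X_reduced.shape, explained_ratio[:k].sum())
```

In practice, the explained variance ratio is a common guide for choosing how many components to keep.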

The common categories and their subcategories are explained in this section. Filter methods are generally used as a preprocessing step. The selection of features is independent of any machine learning algorithm. Instead, features are selected on the basis of their scores in various statistical tests for their correlation with the outcome variable.
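A filter method can be sketched by scoring each feature against the target with Pearson correlation, one of the tests this article lists. The data is synthetic and the choice of keeping the top two features is an assumption for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical data: the target depends on features 0 and 2 only.
X = rng.normal(size=(500, 4))
y = 3.0 * X[:, 0] - 2.0 * X[:, 2] + rng.normal(scale=0.5, size=500)

# Score each feature by absolute Pearson correlation with the target.
scores = np.array(
    [abs(np.corrcoef(X[:, j], y)[0, 1]) for j in range(X.shape[1])]
)

# Keep the top-k features; no model is trained at any point.
k = 2
selected = sorted(np.argsort(scores)[::-1][:k].tolist())
print(selected)
```

Because each feature is scored on its own, this approach is cheap but can miss features that only matter in combination.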

Common methods under this category are Pearson's Correlation, Linear Discriminant Analysis, ANOVA and Chi-Square. In wrapper methods, we try to use a subset of features and train a model using them. Based on the inferences that we draw from the previous model, we decide to add or remove features from the subset.
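The wrapper idea of adding features one at a time can be sketched as a greedy loop around a simple least-squares model. The data, the train/validation split and the 10% improvement threshold used as a stopping rule are all assumptions made for this illustration.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical data: only features 1 and 3 drive the target.
X = rng.normal(size=(300, 5))
y = 4.0 * X[:, 1] + 2.0 * X[:, 3] + rng.normal(scale=0.5, size=300)
X_train, X_val = X[:200], X[200:]
y_train, y_val = y[:200], y[200:]


def validation_mse(columns):
    """Fit least squares on the chosen columns and score on held-out data."""
    A_train = np.column_stack([np.ones(len(X_train)), X_train[:, columns]])
    A_val = np.column_stack([np.ones(len(X_val)), X_val[:, columns]])
    coef, *_ = np.linalg.lstsq(A_train, y_train, rcond=None)
    return float(np.mean((A_val @ coef - y_val) ** 2))


selected, remaining = [], list(range(X.shape[1]))
# Starting point: an intercept-only model that predicts the training mean.
best_mse = float(np.mean((y_val - y_train.mean()) ** 2))

while remaining:
    # Try adding each remaining feature and keep the most helpful one.
    trial = {j: validation_mse(selected + [j]) for j in remaining}
    best_j = min(trial, key=trial.get)
    # Stop when the best candidate no longer gives a clear improvement
    # (the 10% relative threshold is an arbitrary assumption).
    if trial[best_j] > 0.9 * best_mse:
        break
    selected.append(best_j)
    remaining.remove(best_j)
    best_mse = trial[best_j]

print(selected)
```

Unlike a filter method, every candidate subset requires training a model, which is why wrapper methods are more expensive.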

Best Tools For Practicing Data Science Interviews

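Common methods under this category are Forward Selection, Backward Elimination and Recursive Feature Elimination. Among regularization-based methods, LASSO and RIDGE are common ones. The regularizations are given in the equations below for reference, in their standard form, where $y_i$ is the target, $x_i$ the feature vector, $\beta$ the coefficient vector and $\lambda \ge 0$ the penalty strength:

Lasso: $$\min_{\beta} \sum_{i=1}^{n} \left(y_i - x_i^{\top}\beta\right)^2 + \lambda \sum_{j=1}^{p} |\beta_j|$$

Ridge: $$\min_{\beta} \sum_{i=1}^{n} \left(y_i - x_i^{\top}\beta\right)^2 + \lambda \sum_{j=1}^{p} \beta_j^2$$

That being said, it is important to understand the mechanics behind LASSO and RIDGE for interviews.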
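To get a feel for the mechanics, the sketch below fits RIDGE through its closed-form solution, where the penalty is the regularization strength times the sum of squared coefficients. The data and the value `lam=50.0` are assumptions; LASSO has no closed form like this and is normally solved iteratively, so it is not shown.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical regression data.
X = rng.normal(size=(50, 3))
y = X @ np.array([2.0, -1.0, 0.5]) + rng.normal(scale=0.1, size=50)


def ridge_fit(X, y, lam):
    """Closed-form ridge solution (no intercept): (X^T X + lam * I)^-1 X^T y."""
    n_features = X.shape[1]
    return np.linalg.solve(X.T @ X + lam * np.eye(n_features), X.T @ y)


beta_ols = ridge_fit(X, y, lam=0.0)     # lam = 0 reduces to ordinary least squares
beta_ridge = ridge_fit(X, y, lam=50.0)  # a larger penalty shrinks the coefficients

print(np.linalg.norm(beta_ols), np.linalg.norm(beta_ridge))
```

The ridge coefficients have a smaller norm than the unpenalized ones, which is the shrinkage effect interviewers typically ask about.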

Supervised Learning is when the labels are available. Unsupervised Learning is when the labels are unavailable. Get it? SUPERVISE the labels! Pun intended. That being said, do not mix up the two! This mistake is enough for the interviewer to cancel the interview. Another noob mistake people make is not normalizing the features before running the model.
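One common form of normalization is z-score standardization, sketched below. The text does not say which scaling the author has in mind, so this is one reasonable choice; the age and income values are hypothetical.

```python
import numpy as np

# Hypothetical features on very different scales: age in years, income in dollars.
X = np.array([
    [25.0, 40_000.0],
    [35.0, 85_000.0],
    [45.0, 120_000.0],
    [55.0, 60_000.0],
])

# Z-score standardization: subtract each column's mean, divide by its standard deviation.
mean = X.mean(axis=0)
std = X.std(axis=0)
X_scaled = (X - mean) / std

print(X_scaled.mean(axis=0).round(6), X_scaled.std(axis=0).round(6))
```

In a real pipeline the mean and standard deviation would be computed on the training data only and then reused for validation and test data.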

Linear and Logistic Regression are the most basic and commonly used Machine Learning algorithms out there. Before doing any analysis, start with linear or logistic regression as a benchmark. One common interview blooper people make is starting their analysis with a more complex model like a Neural Network. Benchmarks are important.

